Conversational Retrieval#

Building on the limitations of single-turn retrieval systems discussed in the previous chapter, there is growing interest in developing conversational music retrieval capabilities that can maintain context across multiple interactions. This chapter explores how conversational approaches could address the fundamental limitations of current systems while creating more natural and interactive music discovery experiences.

Limitations of Single-Turn Systems#

As discussed previously, current music retrieval systems face several key limitations due to their single-turn nature:

Loss of valuable search context between queries

Inability to learn from user feedback and previous interactions

Limited support for natural query refinement

Missed opportunities for guided music exploration

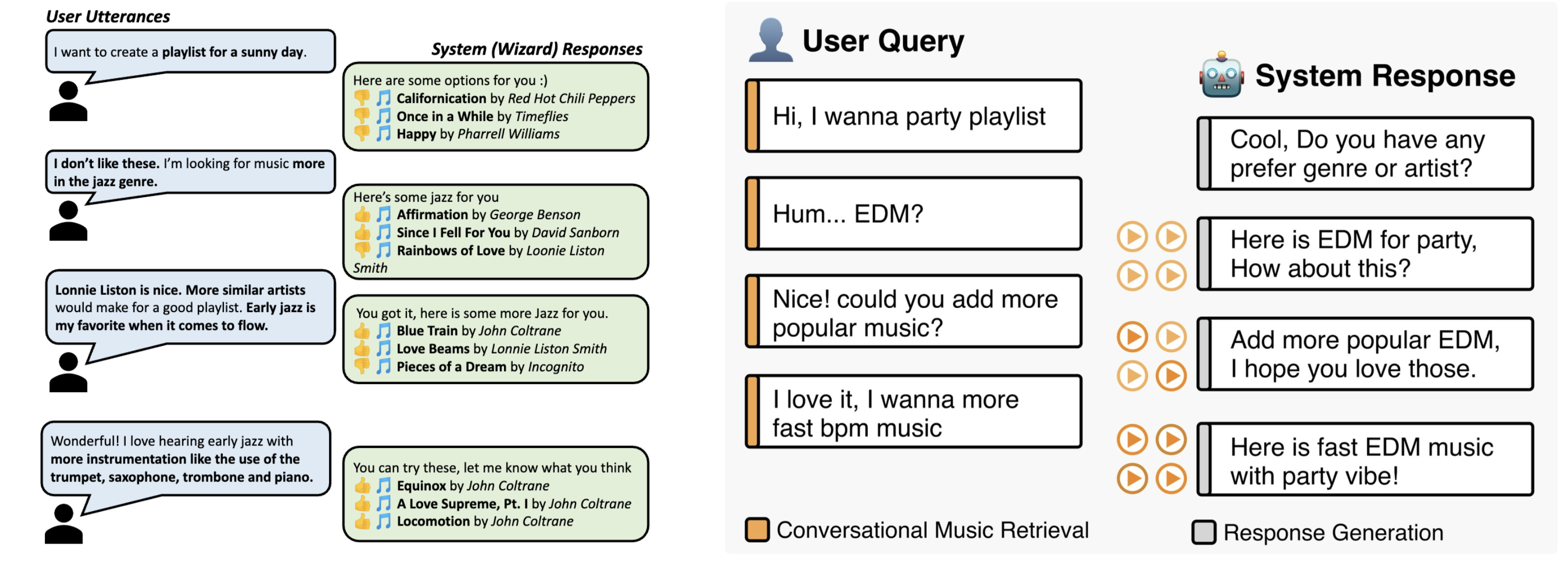

Conversational music retrieval represents a promising direction to address these limitations by enabling multi-turn interactions through chat-based interfaces. Unlike conventional systems where each query is processed independently, conversational approaches maintain dialogue history to build deeper understanding of user needs over time.

Key Benefits of Conversational Retrieval#

The conversational approach offers several important advantages, as demonstrated by recent research efforts in developing conversational music retrieval datasets. For example, Chaganty et al.[CLZ+23] introduced the Conversational Playlist Curation Dataset (CPCD), containing 917 multi-turn dialogues with an average of 5.7 turns each. Their work highlighted key benefits of conversational retrieval. Additionally, [LZG+23] and [DCK+24] address the challenge of limited multi-turn retrieval data through synthetic music discovery conversation generation, leading to improved performance.

Beyond just datasets, these works discuss the benefits and future directions of conversational music retrieval models. They emphasize that conversational approaches enable more natural and intuitive music discovery by maintaining dialogue context and learning from user feedback over time. The research suggests that future models should focus on better understanding user intent through conversation history, generating more engaging responses, and developing more sophisticated retrieval mechanisms that can handle nuanced musical queries and preferences expressed through dialogue.

Key Technical Challenges#

However, building effective conversational music retrieval systems presents several significant technical challenges:

Joint Retrieval and Response Generation: The system must not only retrieve relevant music but also generate appropriate conversational responses. This requires careful integration of retrieval and language generation capabilities.

Context Integration: Previous conversation history needs to be effectively incorporated into both the retrieval and generation processes to maintain coherent dialogue.

Beyond Simple Similarity: Traditional embedding similarity measures may not be sufficient for capturing the nuanced relationships needed in conversational search.

Natural Query Refinement: The system needs sophisticated understanding to handle comparative and refinement-based queries (e.g., “like this but more upbeat”).

Future Directions#

Several promising research directions are emerging to address these challenges:

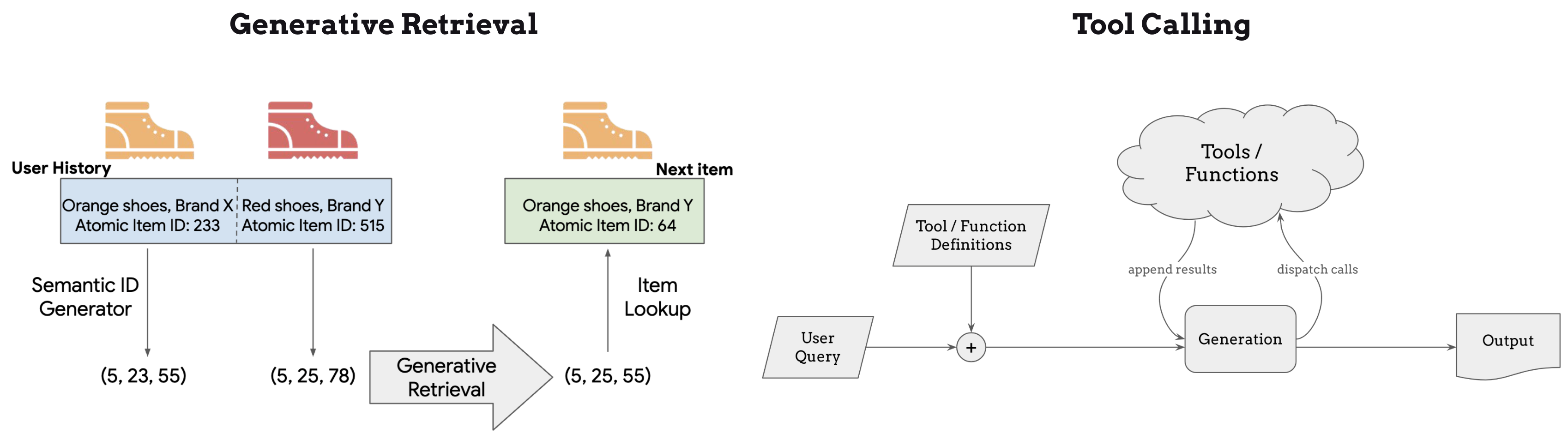

Generative Retrieval: Moving beyond pure similarity-based retrieval to approaches that can generate or compose queries based on conversation context

Tool-Calling Systems: Leveraging large language models that can call retrieval systems as tools, enabling natural language interactions while maintaining robust search capabilities through specialized retrieval components

While significant challenges remain, conversational music retrieval represents an important evolution in how we interact with and discover music. Success in this area could dramatically improve the music search experience by making it more natural, contextual, and effective at serving users’ diverse musical needs through sustained dialogue.

References#

Arun Tejasvi Chaganty, Megan Leszczynski, Shu Zhang, Ravi Ganti, Krisztian Balog, and Filip Radlinski. Beyond single items: exploring user preferences in item sets with the conversational playlist curation dataset. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval. 2023.

SeungHeon Doh, Keunwoo Choi, Daeyong Kwon, Taesu Kim, and Juhan Nam. Music discovery dialogue generation using human intent analysis and large language models. arXiv preprint arXiv:2411.07439, 2024.

Megan Leszczynski, Shu Zhang, Ravi Ganti, Krisztian Balog, Filip Radlinski, Fernando Pereira, and Arun Tejasvi Chaganty. Talk the walk: synthetic data generation for conversational music recommendation. arXiv preprint arXiv:2301.11489, 2023.