Connecting Music Audio and Natural Language

Welcome to the online supplement for the tutorial on “Connecting Music Audio and Natural Language,” presented at the 25th International Society for Music Information Retrieval (ISMIR) Conference, held November 10–14, 2024, in San Francisco, CA, USA. This web book contains all the content presented during the tutorial, including code examples, references, and additional materials that explore the topics in more depth.

Motivation & Aims

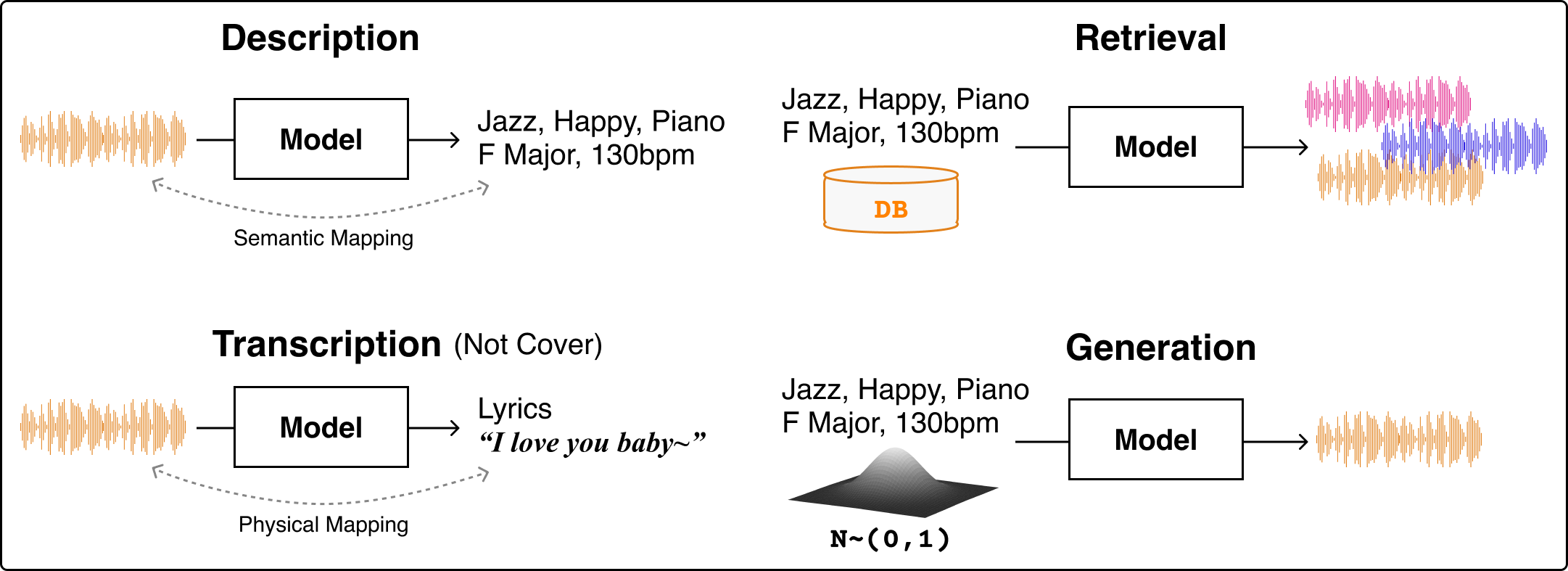

Language serves as an efficient interface for communication between humans, as well as between humans and machines. With recent advances in deep learning-based pretrained language models, systems for understanding, searching, and creating music can now cater to user preferences with greater diversity and precision. This tutorial is motivated by the rapid progress of machine learning techniques, particularly language models, and their growing applications in Music Information Retrieval (MIR). The ability of language models to understand and generate human-like text has paved the way for new methods in music description, retrieval, and generation, changing how we interact with music through technology.

Fig. 1: Overview of frameworks connecting music and language. This tutorial focuses on description, retrieval, and generation; transcription is not covered.

We firmly believe that the MIR community will benefit substantially from this tutorial. Our discussion will not only explain why the multimodal approach of combining music and language models marks a clear departure from conventional task-specific models, but will also survey the latest advances, open challenges, and potential future developments in the field. In parallel, we aim to lay a solid foundation that encourages emerging researchers to enter music-language research by offering comprehensive access to relevant datasets, evaluation methodologies, and coding best practices, thereby fostering an inclusive and innovative research environment.

Getting Started

This web book consists of a series of Jupyter notebooks, which can be explored statically on this page. To run the notebooks yourself, you will need to clone the Git repository:

```shell
git clone https://github.com/mulab-mir/music-language-tutorial/ && cd music-language-tutorial
```

Then create and activate a Python 3.10 environment with conda:

```shell
conda create --name p310 python=3.10 && conda activate p310
```

Next, install the dependencies:

```shell
pip install -r requirements.txt
```
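Optionally, before launching the server, you can sanity-check the environment. The script below is a minimal sketch and is not part of the tutorial repository: the file name `check_env.sh` and the functions `version_at_least` and `check_environment` are hypothetical. It assumes the conda environment created above is active and that `sort -V` (version sort) is available, as it is in GNU coreutils and BSD `sort`.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the tutorial repository), e.g. saved as
# check_env.sh and sourced inside the activated conda environment.

# Succeeds if version string $1 is greater than or equal to version string $2.
# `sort -V` compares dot-separated numbers numerically, so 3.9 sorts before 3.10.
version_at_least() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Checks that python3 on PATH is at least the given major.minor version
# (default 3.10, matching the conda environment created above), then asks
# pip to report installed packages with missing or conflicting requirements.
check_environment() {
  local required="${1:-3.10}"
  local current
  current="$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')" || return 1
  if ! version_at_least "$current" "$required"; then
    echo "Found Python $current, but $required or newer is expected." >&2
    return 1
  fi
  echo "Python $current OK"
  python3 -m pip check
}
```

To use it, source the file in the activated environment and run the check, e.g. `source check_env.sh && check_environment 3.10`. The functions use `return` rather than `exit`, so a failed check reports an error without closing your interactive shell. `pip check` is pip's built-in command for verifying that installed packages have compatible dependencies.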

Finally, launch the Jupyter Notebook server:

```shell
jupyter notebook
```